
Introduction

Welcome to another thrilling edition of Data Science Demystified! This time, we’re diving into one of the simplest yet most effective machine learning algorithms: Naive Bayes. Whether you’re classifying emails, analyzing sentiment, or predicting diseases, Naive Bayes stands out for its speed and reliability. Its straightforward nature makes it a favorite among data scientists, and its strong results have earned it a place in many real-world applications. This guide walks you through implementing Naive Bayes in Python using the classic Iris dataset. Let’s get started!

Machine Learning with Naive Bayes and Python

Naive Bayes is a classification algorithm based on Bayes’ theorem, which calculates the probability of a class given a set of features. Its key assumption is that the features (predictors) are conditionally independent of one another given the class. While this assumption rarely holds exactly in real-world data, the algorithm still performs well in many applications.
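Written out, Bayes’ theorem combined with the conditional independence assumption gives the usual Naive Bayes posterior and decision rule. The notation here (class $y$, features $x_1, \dots, x_n$) is ours rather than taken from a specific library:

```latex
P(y \mid x_1, \dots, x_n) = \frac{P(y)\, P(x_1, \dots, x_n \mid y)}{P(x_1, \dots, x_n)},
\qquad
P(x_1, \dots, x_n \mid y) = \prod_{i=1}^{n} P(x_i \mid y)

\hat{y} = \arg\max_{y} \; P(y) \prod_{i=1}^{n} P(x_i \mid y)
```

Because the denominator $P(x_1, \dots, x_n)$ is the same for every class, it can be dropped when picking the most probable class.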

Naive Bayes is a classic and impactful algorithm in machine learning, particularly for classification tasks. Its appeal lies in its simplicity and speed, making it an excellent choice for both beginners and experienced practitioners. Grounded in Bayes’ theorem, it predicts class labels by combining prior class probabilities, estimated from the training data, with the likelihood of the observed features under each class.
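To make the prior-times-likelihood idea concrete, here is a minimal from-scratch sketch on a tiny, hypothetical weather dataset. The features, labels, and the `fit`/`posterior` helpers are illustrative only, not part of any library. It uses exact fractions so the arithmetic is easy to check by hand:

```python
from collections import Counter, defaultdict
from fractions import Fraction

# Hypothetical toy dataset: each row is ((outlook, windy), play).
data = [
    (("sunny", "no"), "yes"),
    (("sunny", "yes"), "yes"),
    (("rainy", "no"), "yes"),
    (("sunny", "no"), "yes"),
    (("rainy", "yes"), "no"),
    (("sunny", "yes"), "no"),
]

def fit(rows):
    """Count class frequencies and per-class feature-value frequencies."""
    class_counts = Counter(label for _, label in rows)
    # feature_counts[label][i][value] = times feature i took `value` in class `label`
    feature_counts = defaultdict(lambda: defaultdict(Counter))
    for features, label in rows:
        for i, value in enumerate(features):
            feature_counts[label][i][value] += 1
    return class_counts, feature_counts

def posterior(features, class_counts, feature_counts):
    """Return normalized P(class | features) under the naive independence assumption."""
    total = sum(class_counts.values())
    scores = {}
    for label, n_label in class_counts.items():
        score = Fraction(n_label, total)  # prior P(label)
        for i, value in enumerate(features):
            # likelihood P(x_i | label), estimated by counting (no smoothing)
            score *= Fraction(feature_counts[label][i][value], n_label)
        scores[label] = score
    evidence = sum(scores.values())  # shared denominator P(features)
    return {label: s / evidence for label, s in scores.items()}

class_counts, feature_counts = fit(data)
probs = posterior(("sunny", "yes"), class_counts, feature_counts)
print(probs)                      # {'yes': Fraction(3, 7), 'no': Fraction(4, 7)}
print(max(probs, key=probs.get))  # no
```

The prior favors "yes" (4 of 6 rows), but windy days are rare among "yes" rows, so the posterior tips toward "no" (4/7 versus 3/7).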

Despite its “naive” assumption of feature independence, Naive Bayes consistently delivers impressive results across various fields, from text analysis to medical diagnosis. Let’s explore its key concepts, advantages, practical applications, and limitations.

Why Naive Bayes?

- Ease of Implementation: Naive Bayes is straightforward to implement and understand, even for those new to machine learning.

- Fast and Efficient: It processes data quickly, with training and prediction times that scale linearly with the number of samples and features. This makes it ideal for large datasets and real-time applications.

- High-Dimensional Compatibility: The algorithm handles high-dimensional data, such as text features in spam detection or sentiment analysis, with ease.

- Effective with Small Data: Naive Bayes needs relatively little training data to produce useful predictions, making it suitable for scenarios with limited training samples.

Advantages of Naive Bayes

Naive Bayes has become a popular algorithm in the machine learning world, and for good reason. Let’s explore its key benefits:

- Fast and Efficient: Naive Bayes is very quick to train and to make predictions. This makes it an excellent choice for tasks that require real-time predictions or involve large datasets.

- Handles High-Dimensional Data: It performs well even with a large number of features, such as text data where thousands of words might represent each instance.

- Effective with Small Datasets: Even with limited data, Naive Bayes can often provide reasonable predictions, because it only has to estimate simple per-class probabilities for each feature.

- Excels in Text Classification: Naive Bayes shines in tasks like spam detection, sentiment analysis, and topic categorization.

These advantages make Naive Bayes a versatile and reliable choice for many machine learning applications.

Limitations

While Naive Bayes is effective, it’s not without its drawbacks:

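- Independence Assumption: Real-world features are often correlated, which can reduce the algorithm’s accuracy in complex scenarios.

- Zero Frequency Problem: If a feature value never appears with a given class in the training data, its estimated conditional probability for that class is zero. Because Naive Bayes multiplies these probabilities, a single zero wipes out that class’s score and can skew predictions. Solutions like Laplace (additive) smoothing address this.

- Sensitivity to Class Imbalance: Because class priors are typically estimated from class frequencies, Naive Bayes may favor majority classes in imbalanced datasets, hurting minority-class predictions.

- Limited Handling of Continuous Variables: Gaussian Naive Bayes handles continuous features by assuming each one follows a normal distribution within each class. When that assumption fits poorly, continuous data are often discretized instead, which can result in information loss.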
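The zero-frequency fix can be sketched as additive smoothing: add a pseudo-count `alpha` to every possible feature value before normalizing, so unseen values get a small non-zero probability (`alpha=1` is Laplace smoothing). The helper name `smoothed_likelihoods` and the example values are hypothetical; in scikit-learn, `MultinomialNB`, `BernoulliNB`, and `CategoricalNB` expose the same idea through their `alpha` parameter (default 1.0).

```python
from collections import Counter

def smoothed_likelihoods(values, vocabulary, alpha=1.0):
    """Additive-smoothing estimate of P(value | class) for one feature.

    values: the feature values observed for one class in the training data.
    vocabulary: every value the feature can take (seen in any class).
    alpha: pseudo-count added to each value; 1.0 is Laplace smoothing.
    """
    counts = Counter(values)
    n = len(values)
    k = len(vocabulary)
    return {v: (counts[v] + alpha) / (n + alpha * k) for v in vocabulary}

# Hypothetical binary "windy" feature, seen twice for some class, both times "yes".
raw = smoothed_likelihoods(["yes", "yes"], ["yes", "no"], alpha=0.0)
smoothed = smoothed_likelihoods(["yes", "yes"], ["yes", "no"], alpha=1.0)
print(raw)       # {'yes': 1.0, 'no': 0.0}
print(smoothed)  # {'yes': 0.75, 'no': 0.25}
```

Without smoothing, any new sample with windy = "no" would get a zero score for this class no matter what its other features say; with `alpha=1.0` it keeps a 0.25 likelihood instead.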

Applications of Naive Bayes

Naive Bayes might be simple, but its versatility makes it a key player across various fields:

1. Text Classification: Imagine sorting through endless emails or analyzing social media trends. Naive Bayes is a superstar in text-based tasks like:

- Filtering spam emails.

- Understanding sentiment in customer reviews.

- Grouping articles by topic.

Its ability to handle large vocabularies with ease makes it well suited to such tasks.

2. Medical Diagnosis: In healthcare, time and accuracy are critical. Naive Bayes can help predict diseases from symptoms and patient history, giving medical professionals data-driven insights that support quicker diagnoses.

3. Recommendation Systems: Ever wondered how streaming platforms or online stores know what you like? Naive Bayes can be used in recommendation engines to predict user preferences, helping personalize your online experience.

These applications showcase how Naive Bayes turns raw data into impactful solutions across diverse industries.

Step-by-Step Implementation

Here is the step-by-step implementation of Naive Bayes.

Step 1: As a first step, we need to set up the environment. Make sure the following Python libraries are installed (matplotlib and seaborn are used for the plot in Step 7).

pip install numpy pandas scikit-learn matplotlib seaborn

Step 2: Import all necessary libraries.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import GaussianNB

from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

from sklearn.datasets import load_iris

Step 3: Load the data using the built-in Iris dataset.

iris = load_iris()

X = iris.data

y = iris.target

Step 4: Split the dataset into training and testing sets.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

Step 5: Initialize the Naive Bayes classifier and train the model.

# Initialize the Naive Bayes classifier

nb_model = GaussianNB()

# Train the model

nb_model.fit(X_train, y_train)

Step 6: Make predictions and evaluate the model’s performance.

# Make predictions

y_pred = nb_model.predict(X_test)

# Evaluate model performance

accuracy = accuracy_score(y_test, y_pred)

print(f"Accuracy: {accuracy:.2f}")

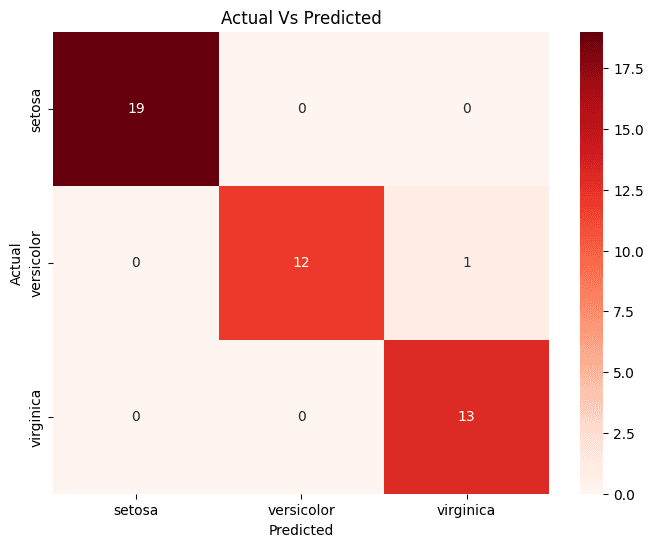

print(classification_report(y_test, y_pred, target_names=iris.target_names))

Step 7: In the last step, generate the confusion matrix and visualize the results. GaussianNB does not provide plotting itself, but you can visualize the confusion matrix with libraries like seaborn or matplotlib.

# Generate the confusion matrix (rows: actual classes, columns: predicted classes)

cm = confusion_matrix(y_test, y_pred)

# Plot the confusion matrix as an annotated heatmap with seaborn

plt.figure(figsize=(8, 6))

sns.heatmap(cm, annot=True, fmt="d", cmap="Blues",

xticklabels=iris.target_names, yticklabels=iris.target_names)

plt.xlabel("Predicted")

plt.ylabel("Actual")

plt.title("Actual vs. Predicted")

plt.show()
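Since Naive Bayes is a probabilistic classifier, you can also inspect the per-class probabilities behind each prediction rather than only the predicted labels. Here is a minimal, self-contained sketch that repeats the setup from the steps above and calls scikit-learn’s `predict_proba` on the first five test samples:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Same setup as the steps above: Iris data, 70/30 split, fixed seed.
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=42
)
nb_model = GaussianNB().fit(X_train, y_train)

# predict_proba returns one row per sample and one column per class
# (columns follow nb_model.classes_); each row sums to 1.
proba = nb_model.predict_proba(X_test[:5])
for row, pred in zip(proba, nb_model.predict(X_test[:5])):
    print(iris.target_names[pred], row.round(3))
```

Naive Bayes probabilities are often pushed toward 0 or 1, because the independence assumption counts correlated evidence more than once, so treat them more as a ranking signal than as well-calibrated confidence.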

Practical Insights

Understanding which Naive Bayes variant to use can significantly improve your model’s performance. Here’s a quick guide:

- Gaussian Naive Bayes: If your features are continuous numerical values and you can assume that, within each class, they follow a bell-shaped Gaussian (normal) distribution, this is your go-to choice. It is commonly used for measurement data such as the Iris features above.

- Multinomial Naive Bayes: When working with text data or discrete count data, such as word frequencies in a document, this variant shines. It’s particularly effective in tasks like spam detection or document categorization.

- Bernoulli Naive Bayes: For binary or Boolean data, such as presence/absence indicators in text classification, this version is the natural fit. It’s often employed in sentiment analysis or when dealing with yes/no datasets.
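As a rough illustration of matching the variant to the data type, the sketch below fits each scikit-learn variant on a tiny, made-up dataset of the kind it is meant for. The arrays are hypothetical and only show the input each model expects: real values for `GaussianNB`, non-negative counts for `MultinomialNB`, and 0/1 flags for `BernoulliNB`.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB, GaussianNB, MultinomialNB

y = np.array([0, 0, 1, 1])  # two hypothetical classes, two samples each

# Continuous measurements -> GaussianNB (assumes per-class normal features)
X_cont = np.array([[1.0, 2.1], [1.2, 1.9], [5.0, 6.2], [5.3, 5.8]])
# Word counts per document (3-word vocabulary) -> MultinomialNB
X_counts = np.array([[3, 0, 1], [2, 0, 0], [0, 4, 1], [0, 3, 2]])
# Presence/absence flags for the same 3 words -> BernoulliNB
X_binary = np.array([[1, 0, 1], [1, 0, 0], [0, 1, 1], [0, 1, 1]])

# name -> (model, training features, two new samples to classify)
models = {
    "gaussian": (GaussianNB(), X_cont, np.array([[1.1, 2.0], [5.1, 6.0]])),
    "multinomial": (MultinomialNB(), X_counts, np.array([[4, 0, 0], [0, 5, 1]])),
    "bernoulli": (BernoulliNB(), X_binary, np.array([[1, 0, 0], [0, 1, 0]])),
}

for name, (model, X, X_new) in models.items():
    model.fit(X, y)
    print(name, model.predict(X_new))  # each variant prints [0 1] on this toy data
```

The fitting API is identical across variants; what changes is the per-feature likelihood model, so the choice comes down to what your features actually look like.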

Additionally, text preprocessing is crucial for success. Key steps include:

- Tokenization (breaking text into words),

- Stemming (reducing words to their base forms), and

- Vectorization (converting text into numerical data with tools like TfidfVectorizer or CountVectorizer).

Together, these steps transform raw text into a format that Naive Bayes algorithms can efficiently process.
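The preprocessing steps above can be sketched with scikit-learn’s `CountVectorizer` feeding `MultinomialNB` in a pipeline. The four-document corpus is hypothetical. `CountVectorizer` handles tokenization and lowercasing; it does not stem words, so stemming would need a separate tool and is not shown here.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy corpus; a real spam filter needs far more data.
texts = [
    "win a free prize now",
    "claim your free cash prize",
    "meeting moved to monday",
    "please review the project report",
]
labels = ["spam", "spam", "ham", "ham"]

# CountVectorizer lowercases and tokenizes each document into word counts
# (its default pattern drops one-character tokens such as "a");
# MultinomialNB then models those counts per class with alpha=1.0 smoothing.
model = make_pipeline(CountVectorizer(), MultinomialNB())
model.fit(texts, labels)

# Words never seen in training ("inside", "on") are simply ignored.
print(model.predict(["free prize inside", "project meeting on monday"]))
# ['spam' 'ham']
```

Swapping `CountVectorizer()` for `TfidfVectorizer()` is a one-line change if you want TF-IDF weights instead of raw counts.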

With these insights, you can better tailor Naive Bayes to your dataset and problem domain, ensuring more accurate and meaningful results.

Real-World Use Cases

Naive Bayes has proven itself as a versatile tool in many impactful domains. Here are some real-world applications where it truly shines:

- Spam Email Detection: Naive Bayes is widely used to classify emails as spam or non-spam by analyzing the frequency of certain words or phrases. It is a common building block in email filtering systems.

- Sentiment Analysis: By evaluating the text content, Naive Bayes helps determine whether a message, review, or tweet expresses positive, negative, or neutral sentiment. This is invaluable for understanding customer opinions.

- Medical Diagnostics: Because it classifies data efficiently, Naive Bayes is used in healthcare to predict diseases from a set of symptoms, which can help speed up diagnosis.

- Recommendation Systems: Whether it’s suggesting products, movies, or articles, Naive Bayes can help categorize user preferences and tailor recommendations accordingly.


Thanks to its efficiency and ability to handle sparse data, Naive Bayes continues to be a go-to algorithm in these modern AI-driven solutions.

Closing Thoughts

Naive Bayes exemplifies the effectiveness of simplicity in machine learning. By mastering this algorithm, you can confidently address a wide range of classification problems with minimal computational effort.

Now it’s time to apply what you’ve learned! Consider implementing Naive Bayes on datasets that are relevant to your field or interests. You can explore datasets on Kaggle or experiment with textual data from open-source repositories.

If you enjoyed this guide or have any questions, please share your thoughts in our LinkedIn community for data scientists and AI enthusiasts. Let’s collaborate and learn together!

Thank you for joining us in this week’s edition. Stay tuned for more in-depth explorations of machine learning algorithms and practical guides.

Published on LinkedIn on 8th December 2024
